Tracking Microfluidic Droplets in ImageJ

2 August 2013

I am researching microfluidic droplets this summer. We run aqueous fluid through a flow-focusing region in a micro-channel, which causes little drops to shear off and flow through the chip. We observe the drops with an inverted microscope and the high-speed Andor Zyla camera.

I am developing a plugin for ImageJ to track the droplets. We want to see their shape, velocity, orientation, area, and other geometric properties, but it takes a long time to track them by hand (and it’s silly, when we have computers).

The problem is that these droplets are hard to track. They have a similar brightness to the background, their borders aren’t always well-defined, and they move quickly relative to the camera’s frame rate. So I am brainstorming new ways to isolate the droplets from the background.

Right now, the tracker relies on various steps of removing noise, subtracting static background pixels, and binarizing the image to convert the droplets to black binary blobs on a white binary background. The tracker itself just observes these binary blobs, so the important work happens before the tracker is even involved. The way in which we convert these noisy, grainy images of real-world droplets into representative binary form directly affects the quality of the tracking results.
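To make the preprocessing idea concrete, here is a minimal sketch in plain Python. It is not the plugin's code. The per-pixel median as a background estimate, the function names, and the threshold value are all assumptions made for illustration, and frames are just nested lists of grayscale values. In this sketch a blob pixel is marked 1 and background 0.

```python
from statistics import median

def static_background(frames):
    """Estimate the static background as the per-pixel median over the stack.
    (Assumption: a median is one common choice; the real filter may differ.)"""
    rows, cols = len(frames[0]), len(frames[0][0])
    return [[median(f[r][c] for f in frames) for c in range(cols)]
            for r in range(rows)]

def binarize(frame, background, threshold):
    """Mark a pixel 1 (blob) where it differs from the background by more
    than `threshold`, otherwise 0."""
    return [[1 if abs(p - b) > threshold else 0
             for p, b in zip(frame_row, bg_row)]
            for frame_row, bg_row in zip(frame, background)]

# Three tiny one-row "frames": a dark pixel (60) moving right over a
# bright background (100).
frames = [
    [[100, 60, 100, 100, 100]],
    [[100, 100, 60, 100, 100]],
    [[100, 100, 100, 60, 100]],
]
background = static_background(frames)
blobs = binarize(frames[1], background, threshold=20)
print(background)  # [[100, 100, 100, 100, 100]]
print(blobs)       # [[0, 0, 1, 0, 0]]
```

Note that `binarize` looks at one frame at a time. Only the background estimate uses the rest of the stack.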

I have some thoughts on how to do better. Right now, I am treating each still frame in the stack as independent from the others. The filters are stateless, in that they work the same on each frame regardless of what frames came before or after in the stack (in time).

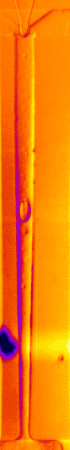

But the droplets are moving, so I should be able to use that crucial detail to massively improve the tracker. When I, a human, see the image above (a photo of a droplet in a channel), I can guess where the droplet is. I expect something oval-shaped, somewhere near the center of the frame. But if I look at the image without any bias, I really don’t know whether that droplet is part of the background or not.

I am biased, in that I look for simple geometry. It’s what my vision is trained to do well. However, as soon as I see the next frame in the stack, it’s obvious what I can ignore as the background.

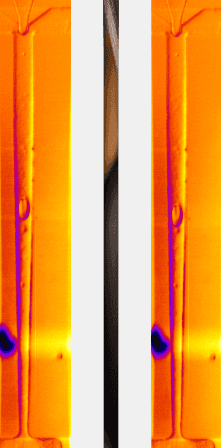

The droplet in the center moved down a bit, but everything else stayed still. I can easily isolate it from the background with my eye, especially when it’s moving. So maybe I can get the tracker to do the same.

Using a subtraction, I can find the literal difference between the later and earlier frames shown above. This yields a “heat map” of everything that moves in the images.

In the heat map, the blue area represents the moving front edge of the drop. Unfortunately, it isn’t round, and it doesn’t look like a drop, because the subtraction removed everything except the change.

When a droplet moves between frames, most of it (by area) seems to stay still. Only the front and rear edges change. So this subtraction can’t be the full solution, but maybe I can use it as a place to look for drops.
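Here is a rough sketch of that subtraction in plain Python, again on nested lists rather than real ImageJ frames. The helper names and the `min_change` cutoff are made up for illustration. The second function turns the changed pixels into a bounding box, which is one simple way to get "a place to look".

```python
def frame_difference(earlier, later):
    """Signed per-pixel change between two frames: later minus earlier."""
    return [[b - a for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(earlier, later)]

def changed_region(diff, min_change):
    """Bounding box (top, left, bottom, right) of all pixels whose absolute
    change exceeds `min_change`, or None if nothing moved."""
    hits = [(r, c)
            for r, row in enumerate(diff)
            for c, value in enumerate(row)
            if abs(value) > min_change]
    if not hits:
        return None
    rows = [r for r, _ in hits]
    cols = [c for _, c in hits]
    return (min(rows), min(cols), max(rows), max(cols))

# A dark "droplet" (60) three pixels tall moves down one row on a
# bright background (100).
earlier = [[100, 100, 100],
           [100,  60, 100],
           [100,  60, 100],
           [100,  60, 100],
           [100, 100, 100],
           [100, 100, 100]]
later   = [[100, 100, 100],
           [100, 100, 100],
           [100,  60, 100],
           [100,  60, 100],
           [100,  60, 100],
           [100, 100, 100]]

diff = frame_difference(earlier, later)
print([row[1] for row in diff])  # [0, 40, 0, 0, -40, 0]
print(changed_region(diff, 10))  # (1, 1, 4, 1)
```

The middle of the droplet cancels to zero. Only the rear edge (which brightened) and the front edge (which darkened) survive, so the difference alone can't give the droplet's shape, but the box still brackets where it is.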

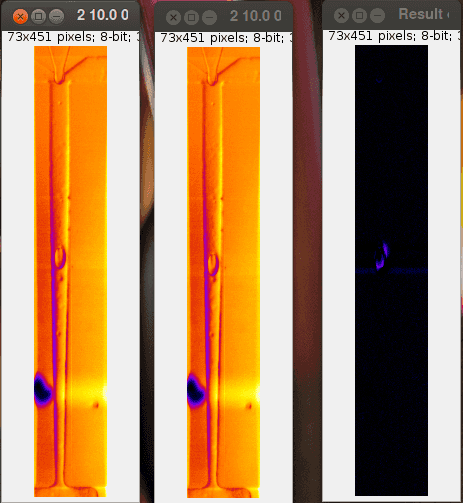

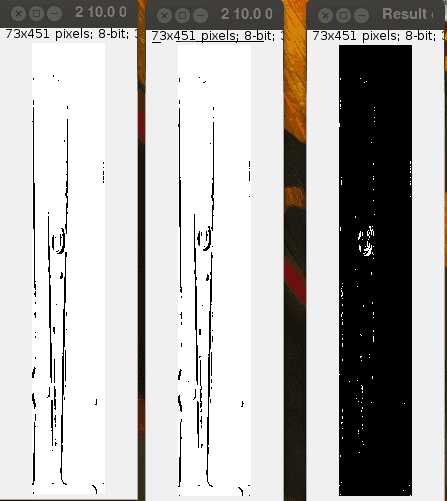

Interestingly, I can do the same on the binary version of the image.

The two left images above are the messy, thresholded representation of two of the original images (not exactly the ones above, but similar). I can make out the droplet in the center, but so much mess surrounds it that the tracker gets confused.

The image on the right is the result of XORing the two left images. Wherever one image has a dark pixel and the other doesn’t, the XOR lights up. This yields a robust hot spot where the drop is moving, but it also picks up some noise from the blinking background pixels (an artifact of the thresholding).
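The XOR itself is tiny. This sketch assumes each binary frame is a nested list where 1 means a dark (droplet) pixel and 0 means background. That encoding and the function name are choices made for the example, not something taken from the plugin.

```python
def xor_frames(a, b):
    """Pixel-wise XOR of two binary frames: 1 wherever exactly one of the
    two frames has a dark pixel."""
    return [[p ^ q for p, q in zip(row_a, row_b)]
            for row_a, row_b in zip(a, b)]

# Row 0: a two-pixel blob shifts right by one pixel.
# Row 1: a lone background pixel "blinks" on in the first frame only.
first  = [[0, 1, 1, 0, 0],
          [0, 0, 0, 0, 1]]
second = [[0, 0, 1, 1, 0],
          [0, 0, 0, 0, 0]]

print(xor_frames(first, second))
# [[0, 1, 0, 1, 0], [0, 0, 0, 0, 1]]
```

Row 0 shows the hot spot at the blob's two edges, and row 1 shows how a blinking pixel leaks through as noise.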

It isn’t directly trackable, but I hope I can work it into my system soon.